Hands-on physical assessment of devices that may be used in a telehealth program can benefit an organization in several ways, including:

- Development of a better understanding of the technology

- Clarification of device strengths and weaknesses

- Creation of assessment expertise within staff

Some organizations may feel that the testing of medical equipment is too difficult to do without engineers, specialty lab configurations, and large budgets. While there may be some types of assessments that benefit from these resources, many devices can be evaluated with existing personnel and facilities, and at minimal expense (especially when compared to the high cost of a poor technology selection).

It is important to spend time ensuring that the correct devices are brought in for testing, to plan out the exact tests that each device will perform, and to be consistent when executing your test plan. Work done early in the planning and testing stage will help create a smoother device evaluation period, and will make analysis of the results more meaningful.

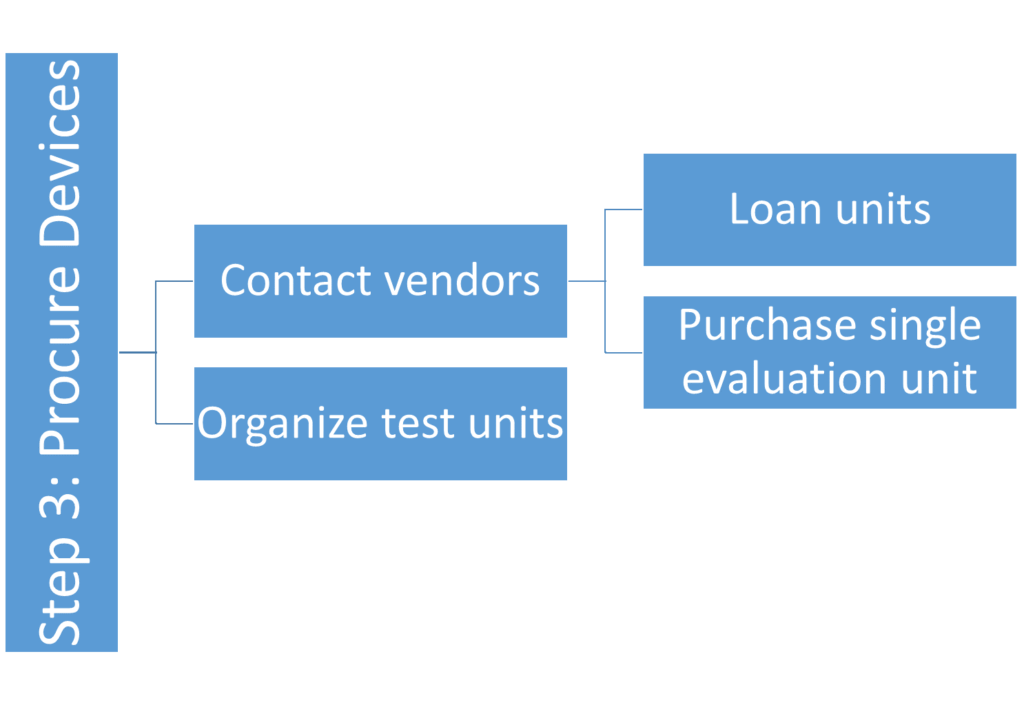

Step 3. Procure Devices

Figure 6. Step 3: Procure Devices

Ideally, your organization will have completed the work described in the Information Gathering section of this toolkit before moving on to ordering devices. Compare the list of organizational requirements, generated from user profiles, use cases, and known needs, against the output of the market review, and remove any devices that clearly will not meet the organization's basic requirements.
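The screening step can be sketched in a few lines of Python. This is a minimal illustration, assuming each device from the market review is recorded with a set of feature tags and that the basic requirements can be expressed as must-have tags; the `Device` class, the tag names, and the example devices are all hypothetical.

```python
from dataclasses import dataclass, field


# Hypothetical record for one device found during the market review.
@dataclass
class Device:
    name: str
    manufacturer: str
    price: float
    features: set[str] = field(default_factory=set)


def meets_basic_requirements(device: Device, required: set[str]) -> bool:
    """True if the device offers every must-have feature."""
    return required <= device.features


def screen_devices(devices: list[Device], required: set[str]) -> list[Device]:
    """Drop devices that clearly cannot meet the basic requirements."""
    return [d for d in devices if meets_basic_requirements(d, required)]


# Example data (hypothetical devices and feature tags).
market_review = [
    Device("Camera A", "Acme", 1200.0, {"usb", "hd_video", "autofocus"}),
    Device("Camera B", "Beta", 800.0, {"usb", "hd_video"}),
    Device("Camera C", "Acme", 950.0, {"hd_video"}),
]
required = {"usb", "hd_video"}
candidates = screen_devices(market_review, required)
print([d.name for d in candidates])  # ['Camera A', 'Camera B']
```

Requirements that can only be judged through hands-on use are better left to the testing steps that follow.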

At this point, you should have a set of devices that could potentially meet the needs of the telehealth system. The list can be narrowed further by considering factors such as:

- Devices from the same manufacturer

- Budget

- Other scoping elements (e.g., minimum or maximum price)

The process of procuring devices for testing will depend in part on vendor relationships, equipment budgets, and organizational procedures for acquiring equipment. Some vendors and manufacturers are willing to loan equipment for evaluation purposes. If so, review the loan agreement for any specific terms about loan duration, responsibility for shipping and damage, and consumables that may not be included in the loan.

It is recommended that all equipment be obtained so that it is available for testing at the same time. This reduces the amount of “churn” associated with assessing the devices and improves the overall testing experience. Create a list of any other equipment required for testing and purchase it. This may include:

- Connectors and cables

- Measuring devices

- Additional computer equipment

Keep devices organized as they are received; placing equipment, cables, and manuals into clear plastic totes can help keep everything together. Larger assessments that include many devices and pieces of supporting equipment benefit greatly from a consistent organizational system.

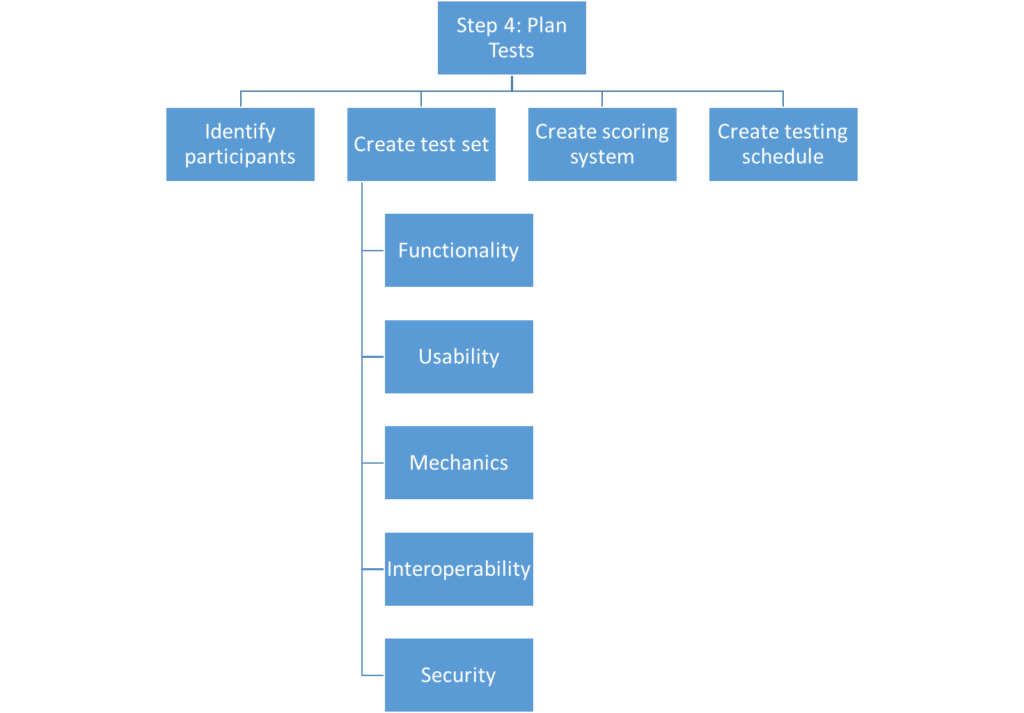

Step 4. Plan Tests

If thorough requirements were created as part of your needs assessment, much of the work involved in assembling a test plan has already been done.

Working with a team representing the various user profiles generated during the information gathering section of this process, create a set of tests that will evaluate how well the devices can meet the requirements established during the needs assessment. The tests may vary by device, but should generally address concerns surrounding:

- Functionality – how well does the device perform the intended task? This may include an evaluation of images, audio, or other data gathered by the device.

- Usability – how easily can a person perform the tasks needed to use the device properly? This may include a set of basic interactions with the device that typify a user’s experience.

- Mechanics – how well is the device constructed? This may include a basic, objective assessment of the product and its components. Accessibility of ports, durability of materials and cables, battery life, and other similar topics may be included. In extreme cases, destructive stress testing (drop tests, immersion tests, etc.) may be required.

- Interoperability – how well does the device work with other systems? Many devices used in telemedicine are expected to communicate with videoconferencing platforms, computers, store-and-forward systems, and, increasingly, electronic health records. It is important to test how well the devices work in a real-world environment, as even the best standards sometimes fail to ensure that products will communicate as advertised.

- Security – how is patient information collected, stored, transmitted, and protected on the device? It is important to consider your requirements for physical, personnel, and network security. Take special care with devices that have networking functionality, such as Wi-Fi, Bluetooth, or cellular connectivity. Involving your information security team in creating these criteria is prudent and can save considerable time, effort, and cost later.

After the basic testing criteria are established, decide how the devices will be scored. Depending on the product being evaluated, this may be a simple Yes/No rating or some form of numerical score. A small range of numbers, such as 1 through 3, can indicate that a product fails to meet basic needs, meets basic needs, or exceeds basic needs. More levels may be used, depending on the intent of the testers. A 1-4 scale has no middle value, which keeps testers from defaulting to a neutral “meets needs” rating; this can be useful for subjective measures such as image or sound quality. Strike a balance between simplicity in testing and the usefulness of the resulting information.
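If scores will be entered into a spreadsheet or small script, it can help to write the agreed scales down explicitly so that out-of-range entries are caught early. The sketch below assumes three hypothetical scale names (`yes_no`, `1-3`, `1-4`); the labels for the 1-3 scale follow the text above, and the middle-value check shows why an even number of levels avoids a neutral default.

```python
# Hypothetical scale definitions: scale name -> allowed score values.
SCALES: dict[str, set[int]] = {
    "yes_no": {0, 1},     # 0 = No, 1 = Yes
    "1-3": {1, 2, 3},     # fails / meets / exceeds basic needs
    "1-4": {1, 2, 3, 4},  # even number of levels
}


def validate_score(scale: str, score: int) -> int:
    """Return the score unchanged, or raise if it is not on the agreed scale."""
    allowed = SCALES[scale]
    if score not in allowed:
        raise ValueError(
            f"score {score} is not valid on the {scale!r} scale {sorted(allowed)}"
        )
    return score


def has_middle_value(scale: str) -> bool:
    """An odd number of levels leaves a neutral middle rating to fall back on."""
    return len(SCALES[scale]) % 2 == 1


print(has_middle_value("1-3"), has_middle_value("1-4"))  # True False
```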

Discuss the rating system and the individual testing criteria with all people who will be involved in testing. Ensure that everyone who will participate in the process understands what is expected of them, and that they agree on how devices should be rated. Individual interpretations of the meaning of scores may create some discrepancies in how devices are compared (some individuals may be very hesitant to give a product a top-level score, while others may do so for any device that meets their expectations). An agreement up front may reduce the amount of confusion later in the process.
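One way to surface differing interpretations before formal testing is to have every tester rate the same device on the same test and compare the results. The following sketch assumes ratings are stored as hypothetical `(rater, device, test, score)` tuples. It reports each rater's average score (a consistently low or high average can reveal a reluctant or generous rater) and flags any device/test pair whose scores differ by more than an agreed spread; the 1-point threshold is an arbitrary example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical ratings gathered in a short calibration round.
ratings = [
    ("Ana", "Camera A", "image_quality", 4),
    ("Ben", "Camera A", "image_quality", 2),
    ("Ana", "Camera B", "image_quality", 3),
    ("Ben", "Camera B", "image_quality", 3),
]


def rater_means(ratings):
    """Average score given by each rater across everything they rated."""
    by_rater = defaultdict(list)
    for rater, _device, _test, score in ratings:
        by_rater[rater].append(score)
    return {rater: mean(scores) for rater, scores in by_rater.items()}


def disagreements(ratings, max_spread=1):
    """(device, test) pairs whose highest and lowest scores differ by more than max_spread."""
    by_item = defaultdict(list)
    for _rater, device, test, score in ratings:
        by_item[(device, test)].append(score)
    return {
        item: scores
        for item, scores in by_item.items()
        if max(scores) - min(scores) > max_spread
    }


print(rater_means(ratings))    # {'Ana': 3.5, 'Ben': 2.5}
print(disagreements(ratings))  # {('Camera A', 'image_quality'): [4, 2]}
```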

Establish a testing schedule up front. If testing must be spread across multiple days or weeks, set targets for what will be done each day, grouping similar testing goals together.
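For larger assessments, a rough daily plan can be generated from a list of tests and time estimates. This sketch assumes hypothetical test names, categories, hour estimates, and a six-hour testing day. It orders tests by an agreed category sequence so similar goals stay together, then fills each day greedily; a test longer than a full day simply gets a day to itself. The category order shown is only an example.

```python
# Hypothetical tests: (category, test name, estimated hours for all devices).
tests = [
    ("usability", "Power on and connect", 2.0),
    ("mechanics", "Port accessibility", 1.5),
    ("usability", "Capture and send an image", 3.0),
    ("mechanics", "Cable durability", 2.5),
    ("interoperability", "Connect to videoconferencing platform", 4.0),
]


def build_schedule(tests, category_order, hours_per_day=6.0):
    """Group tests by category, then pack them into days in that order."""
    rank = {category: i for i, category in enumerate(category_order)}
    # sorted() is stable, so tests keep their listed order within a category.
    ordered = sorted(tests, key=lambda t: rank[t[0]])
    days, current, used = [], [], 0.0
    for category, name, hours in ordered:
        # Start a new day when this test would exceed the daily budget.
        if current and used + hours > hours_per_day:
            days.append(current)
            current, used = [], 0.0
        current.append((category, name))
        used += hours
    if current:
        days.append(current)
    return days


order = ["mechanics", "usability", "interoperability"]
for day_number, day in enumerate(build_schedule(tests, order), start=1):
    print(f"Day {day_number}: {[name for _, name in day]}")
```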

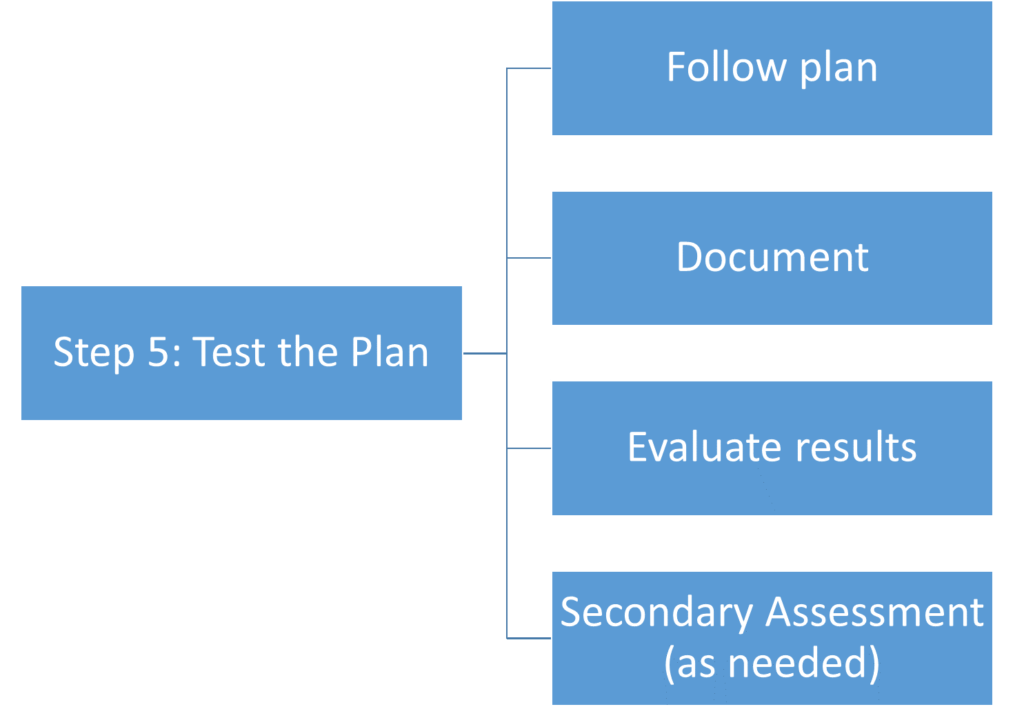

Step 5. Test the Plan

As mentioned above, the timing of tests is important. Where possible, test all devices against a single criterion at a time, as this allows a more direct comparison of each device with the others. When performing tests that require a comparison of images or sounds, gather the samples in as controlled an environment as possible.

It can be useful to test the mechanical properties and usability of a device before moving to more specific tests. These first parts of the assessment build familiarity with each device, which speeds up later tests that may require changing settings or accessing advanced features. Two (or more) devices may be designed for very similar purposes, yet differ widely in actual use and operation.

Organization is key to keeping a test orderly and running in a timely fashion. Using labeled boxes can help keep cables, connectors, and devices from getting mixed up. Much time can be lost if a single, critical component goes missing due to poor organizational processes.

Many organizations may choose to perform qualitative assessments when evaluating devices, as they may lack the time, resources, or expertise to run a thorough quantitative examination. If this is the case, be clear up front with testing staff about the level of detail expected in the results. If quantitative testing is an option, however, ensure that strict controls are in place to produce balanced, fair results.

Document all testing results, using a format similar to the requirements comparison table if possible. This makes it easier to compare the goals of the testing process with the actual results.
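If results are kept electronically, they can be written in the same shape as a requirements comparison table: one row per requirement and one column per device. This sketch uses Python's standard `csv` module; the requirement names, device names, and entries are hypothetical, and an untested cell is left blank rather than guessed.

```python
import csv
import io

# Hypothetical requirements and devices under test.
requirements = ["USB connection", "HD video", "Battery life of 4+ hours"]
devices = ["Camera A", "Camera B"]

# Recorded results keyed by (requirement, device); a missing key means "not yet tested".
results = {
    ("USB connection", "Camera A"): "Yes",
    ("USB connection", "Camera B"): "Yes",
    ("HD video", "Camera A"): "3",
    ("HD video", "Camera B"): "2",
    ("Battery life of 4+ hours", "Camera A"): "Yes",
}


def results_table(requirements, devices, results) -> str:
    """Return CSV text with one row per requirement and one column per device."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Requirement", *devices])
    for requirement in requirements:
        writer.writerow(
            [requirement, *(results.get((requirement, d), "") for d in devices)]
        )
    return buffer.getvalue()


print(results_table(requirements, devices, results))
```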

Result Evaluation

After the assessment is completed, gather the testing team to discuss the scores. Having the devices on hand makes the discussion more useful, as discrepancies in opinion and rating can be addressed with the device present, and any questions about why certain scores were given for a test can be clarified.

Determine how differences in scores will be resolved. In some cases, original entries may be modified after additional discussion (for example, if the team reaches a better understanding of the device, test, or rating criteria). Decide whether all scores will be averaged and whether any category will be weighted more heavily than another, then compile the results.
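If the team decides to average scores across testers and weight some categories more heavily than others, the arithmetic is a weighted mean: for each device, average each category's scores, multiply each average by that category's weight, and divide the total by the sum of the weights used. The sketch assumes hypothetical scores on a 1-4 scale and an example weighting in which functionality counts twice as much as usability; with equal weights it reduces to a plain average.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical tester scores per (device, category), on a 1-4 scale.
scores = {
    ("Camera A", "functionality"): [4, 3],
    ("Camera A", "usability"): [2, 3],
    ("Camera B", "functionality"): [3, 3],
    ("Camera B", "usability"): [4, 4],
}

# Hypothetical weights: functionality counts twice as much as usability.
weights = {"functionality": 2.0, "usability": 1.0}


def compile_results(scores, weights):
    """Weighted mean of per-category averages for each device."""
    category_means = defaultdict(dict)
    for (device, category), values in scores.items():
        category_means[device][category] = mean(values)
    overall = {}
    for device, means in category_means.items():
        # Divide by the weights actually used, so a missing category is not scored as 0.
        weight_total = sum(weights[c] for c in means)
        overall[device] = sum(weights[c] * m for c, m in means.items()) / weight_total
    return overall


for device, score in compile_results(scores, weights).items():
    print(f"{device}: {score:.2f}")
# Camera A: 3.17
# Camera B: 3.33
```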

From the list of reviewed products, make a short list of devices that meet the requirements and that scored highly in the testing process.
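Building the short list combines two checks: the device meets the requirements, and its compiled score clears a cutoff. In this sketch the cutoff of 3.0, the limit of three devices, and the example scores are hypothetical values that the team would set for itself.

```python
def short_list(overall, meets_requirements, min_score=3.0, max_devices=3):
    """Highest-scoring devices that meet requirements and reach min_score."""
    eligible = [
        device
        for device, score in overall.items()
        if meets_requirements.get(device, False) and score >= min_score
    ]
    return sorted(eligible, key=lambda d: overall[d], reverse=True)[:max_devices]


# Hypothetical compiled scores and requirement checks.
overall = {"Camera A": 3.2, "Camera B": 3.5, "Camera C": 3.8, "Camera D": 2.1}
meets = {"Camera A": True, "Camera B": True, "Camera C": False, "Camera D": True}
print(short_list(overall, meets))  # ['Camera B', 'Camera A']
```

Camera C is left off despite the highest score because it fails a requirement, and Camera D meets the requirements but falls below the cutoff.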

Secondary Assessment

Some organizations choose to stop testing after one round of evaluations. It can be useful, however, to take the top-performing devices and run additional tests. These tests may include input from additional user perspectives, such as clinicians who may use the products, or may simply be a more in-depth evaluation by the initial testing team. This may also be an appropriate time to perform quantitative tests on the subset of devices, as these more time-intensive tests are easier to carry out on fewer units.